Run Large Language Models Locally with Ollama

Have you wanted to experiment with large language models (LLMs) but been held back by the need for powerful hardware or cloud access? Ollama lets you run them on your own machine.

Ollama: Your Gateway to Local LLM Exploration

Ollama is a tool for running a variety of LLMs directly on your local machine. It removes the need for a cloud setup, provided your machine has enough RAM for the model you choose.

Getting Started with Ollama in Simple Steps

1) Download Ollama:

- Visit https://ollama.com/ and download the installer for your operating system (Windows, macOS, or Linux).

2) Run a model:

- Open a terminal or command prompt window.

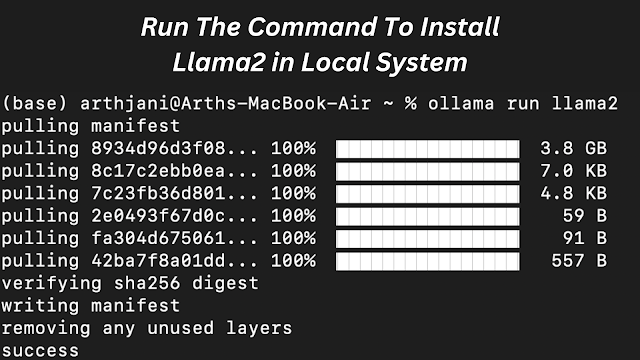

- Type the following command, replacing <model_name> with the name of the LLM you wish to run (for example, llama2):

ollama run <model_name>

- If the specified model has not been downloaded yet, Ollama downloads it automatically before starting it.
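The download step can also be made explicit rather than left to the first run. The sketch below assumes the `ollama` CLI is installed and on your PATH, and uses its `pull` and `run` subcommands. The helper name `run_model` is hypothetical and exists only for illustration.

```shell
# Hypothetical helper: fetch a model explicitly, then start a session with it.
# Assumes the `ollama` CLI is installed and on PATH.
run_model() {
    model="${1:-llama2}"   # fall back to llama2 when no name is given

    # Fail early with a readable message if Ollama is not installed.
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama not found; install it from https://ollama.com/" >&2
        return 1
    fi

    # Download the model first; only missing data is fetched.
    ollama pull "$model" || return 1

    # Start the interactive session.
    ollama run "$model"
}
```

Calling `run_model codellama` would pull and then start the codellama model. Separating the two steps is a design choice that makes a failed download easier to spot than when it happens inside `ollama run`.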

Explore the LLM Landscape from Your Local Machine

Ollama supports many pre-trained LLMs beyond llama2. Explore the available models at https://github.com/ollama/ollama. You can browse by popularity or search for a model by name.

Once you have chosen a model, substitute its name for <model_name> in the command and run it in your terminal. For instance, to run the Code Llama model, you would use:

ollama run codellama

Important Note Regarding RAM Requirements:

Make sure your system meets the minimum RAM requirement for the size of model you want to run:

- 7B models: At least 8 GB of RAM

- 13B models: At least 16 GB of RAM

- 33B models: At least 32 GB of RAM
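The thresholds above can be turned into a quick check before you download a model. This is a minimal sketch: the function names `min_ram_gb` and `has_enough_ram` are hypothetical, and treating each listed size as the upper bound of a tier (so a 10B model is checked against the 13B figure) is an assumption of this sketch, not something Ollama specifies.

```shell
# Hypothetical helper: print the minimum RAM (in GB) from the list above
# for a model with the given parameter count in billions.
# Assumption: each listed size is the upper bound of its tier.
min_ram_gb() {
    params="$1"
    if [ "$params" -le 7 ]; then
        echo 8
    elif [ "$params" -le 13 ]; then
        echo 16
    elif [ "$params" -le 33 ]; then
        echo 32
    else
        echo "no guideline listed for ${params}B models" >&2
        return 1
    fi
}

# Hypothetical helper: succeed if a machine with $2 GB of RAM meets
# the guideline for a model with $1 billion parameters.
has_enough_ram() {
    needed="$(min_ram_gb "$1")" || return 1
    [ "$2" -ge "$needed" ]
}
```

For example, `has_enough_ram 13 16` succeeds, while `has_enough_ram 33 16` fails because the list asks for 32 GB.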

Unlocking the Potential of LLMs with Ollama

Ollama is useful for developers, researchers, and anyone who wants to explore what large language models can do. Experiment with various LLMs for tasks like:

- Text generation and creative writing

- Text translation and language understanding

- Code completion and software development assistance

- Question answering and factual information retrieval

And much more! Ollama lets you explore LLMs without specialized hardware or cloud configuration.
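To try one of these tasks without opening an interactive session, a prompt can be passed to `ollama run` as an extra argument, in which case the model answers once and exits. The wrapper below is a sketch under that assumption. The name `ask` is hypothetical, and the model names in the usage examples are only illustrations.

```shell
# Hypothetical helper: send a single prompt to a model and print the reply.
# Usage: ask <model_name> <prompt...>
ask() {
    if [ "$#" -lt 2 ]; then
        echo "usage: ask <model_name> <prompt...>" >&2
        return 2
    fi
    model="$1"
    shift
    # All remaining arguments are joined into one prompt string.
    ollama run "$model" "$*"
}
```

With this in place, `ask llama2 "Translate 'good morning' into French."` would cover the translation case, and `ask codellama "Write a Python function that reverses a string."` the code-assistance case.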
